Weekly Dose of Optimism #179

Opus 4.6, GPT-5.3-Codex, As Rocks May Think, Mouse Brain Computers, Waymo, Contrary Tech Trends + 4 Extra Doses

Hi friends 👋,

Happy Friday and welcome back to the 179th Weekly Dose of Optimism!

We started writing the Weekly Dose during the 2022 bear market because there was a disconnect between the incredible things we saw being built and the (largely market-driven) pessimism. So this week is great. We were born in the darkness.

Even as the markets have vomited, the innovation has continued apace. Zoom out.

We have another jam-packed week of optimism, including four Extra Doses below the fold for not boring world members.

Let’s get to it.

Today’s Weekly Dose is brought to you by… Guru

Your team is probably already using AI for everything: research, customer support, product decisions. Just one problem… AI is confidently wrong about your company knowledge 40% of the time.

While everyone races to deploy more AI tools, they’re building on a foundation of outdated wikis, scattered documents, and tribal knowledge that was never meant to power automated decisions.

Guru solved this for companies like Spotify and Brex. They built the only AI verification system that automatically validates company knowledge before your AI agents use it. Think of it as quality control for your AI’s brain.

The companies that figure this out first will have AI that actually works. The ones that don’t will waste valuable human time cleaning up expensive mistakes.

(1) Introducing Claude Opus 4.6 and Introducing GPT-5.3-Codex

Anthropic and OpenAI, respectively

The race between Anthropic and OpenAI to build the smartest, most useful thinking machines is heating up, and it’s riveting. The day after Anthropic released its Super Bowl commercials, which make fun of OpenAI for planning to introduce ads into its product (many people, including Jordi Hays, think the commercials are a bit deceptive, but they are super entertaining)…

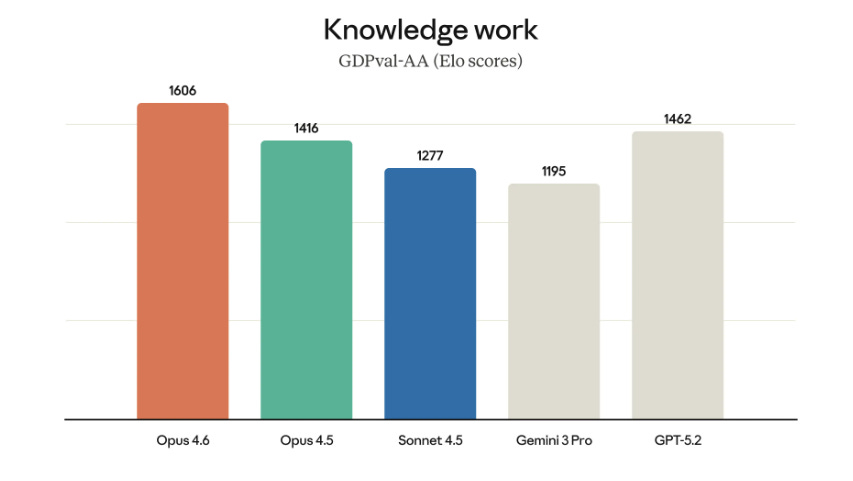

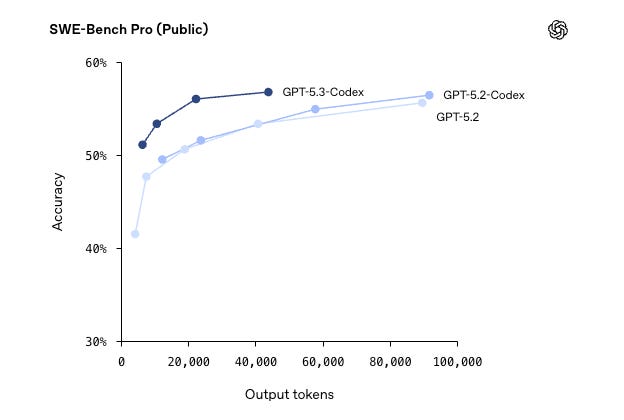

… both companies dropped their newest, smartest models. Anthropic released Opus 4.6 and OpenAI released GPT-5.3-Codex (Codex is its coding model/app).

Anthropic’s Opus 4.6 is for everyone: better at coding, plans longer, runs financial analyses, does research, etc… I’ve been playing with it and it’s definitely smarter (although thankfully it’s still a shitty writer).

OpenAI’s very-OpenAI-named GPT-5.3-Codex is for coding. It slots right into the Codex app they released this week. I had 5.2 build a website for not boring, and it was very cool that it could build it, but no matter how hard I prompted, the design was trash. I told 5.3 to throw out that trash and make me something that looked better, and it actually did a decent job in one shot. It can also do things like make models and presentations and docs, although it’s not available in Chat yet.

In both cases, researchers at the labs used their own agents to help research and build the new models. “Taken together,” OpenAI writes, “we found that these new capabilities resulted in powerful acceleration of our research, engineering, and product teams.” This is the mechanism at the heart of the fast-takeoff thesis: models so smart that they make the next models smarter, and so on.

I don’t know what to say other than have fun playing with your new geniuses this weekend.

(2) As Rocks May Think

Eric Jang

Whenever logical processes of thought are employed — that is, whenever thought for a time runs along an acceptive groove — there is an opportunity for the machine.

— Dr. Vannevar Bush, As We May Think, 1945

How’d we get here?

Eric Jang is VP of AI at 1X Technologies, the humanoid robotics company, and before that spent six years at Google Brain robotics where he co-led the team behind SayCan. He’s one of the people building the robots we covered in my robotics cossay with Evan Beard a few weeks ago.

His new essay, As Rocks May Think, is a riff on Vannevar Bush’s 1945 classic, As We May Think, and the title is the thesis: we taught rocks to think, and they’re getting really smart.

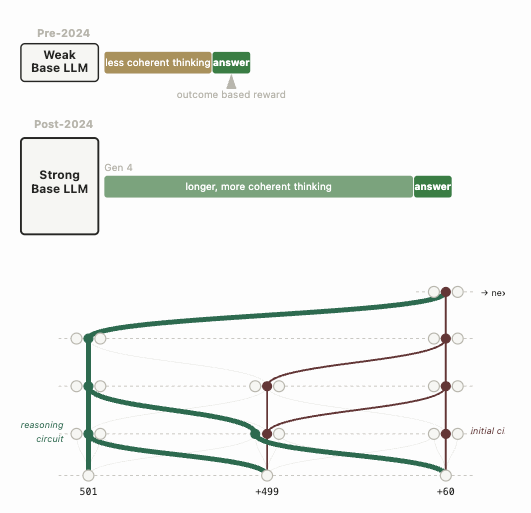

The piece is part technical history, part practical manual, and it is pretty technical, but it’s the most concise overview I’ve come across of how we got to where we are today and where we might be going from here. Jang walks through the intellectual lineage of machine reasoning, from symbolic logic systems that collapsed when a single premise was wrong, through Bayesian belief nets that got tripped up by compounding uncertainty, to AlphaGo’s breakthrough combination of deductive search and learned intuition, and finally to today’s reasoning models, like Opus 4.6 and GPT-5.3-Codex.

For the practical manual piece, Jang walks through building his own AlphaGo and how he uses AI today: “Instead of leaving training jobs running overnight before I go to bed, I now leave "research jobs" with a Claude session working on something in the background. I wake up and read the experimental reports, write down a remark or two, and then ask for 5 new parallel investigations.”

He suspects we’ll all have access to today’s researcher-level compute soon, and that when we do, we are going to need a shit-ton of it. He compares thinking machines to air conditioning, a technology that Lee Kuan Yew credited with changing the nature of civilization by making the tropics productive. Air conditioning currently consumes 10% of global electricity. Data centers consume less than 1%. If automated thinking creates even a fraction of the productivity gains that climate control did, the demand for inference compute is going to be enormous.

Maybe that’s why Google anticipates $185 billion in 2026 CapEx spend and Amazon anticipates an even bigger $200 billion, a number that sent its stock tumbling after hours.

The sell-off is ugly, but if Jang is right, all of that buildout and much more is going to be put to use. I asked my thinking rock (Claude Opus 4.6) what it thinks about the sell-off. It told me: “if the bottleneck is inference compute, build the data centers. Vertical integration, baby.”

(3) Drone Controlled by Cultured Mouse Brain Cells Enters Anduril AI Grand Prix

Palmer Luckey

Don’t count thinking cells out yet, though!

Anduril’s AI Grand Prix, a drone racing competition, has strict rules: identical drones, no hardware mods, AI software flies. Over 1,000 teams signed up in the first 24 hours to compete for $500,000 and a job at Anduril.

Then one team showed up planning to use a biological computer built from cultured mouse brain cells to fly their drone.

Mouse brain cells. Australian company Cortical Labs commercially launched the CL1 last year: a $35,000 device that fuses lab-grown neurons with silicon chips. The neurons are grown on electrode arrays, kept alive in a life-support housing, and learn tasks through electrical stimulation. In 2022, the team placed 800,000 human and mouse brain cells on a chip and taught the network to play Pong in five minutes. The neurons run on a few watts and learn from far less data than conventional AI.

So: is a mouse brain “software”? Who cares. As Luckey put it:

“At first look, this seems against the spirit of the software-only rules. On second thought, hell yeah.”

(4) Waymo Raises $16 Billion, Now Does 400,000 Rides a Week

Waymo

Speaking of autonomous vehicles… Alphabet’s self-driving car company has way mo’ money at its disposal to save lives.

Nearly 40,000 Americans died in traffic crashes last year. The leading causes, things like distraction, impairment, and fatigue, are all fundamentally human problems. Waymo doesn’t have those problems. Its cars are safer than human drivers, and the faster we get more of them (and other self-driving cars) on the road, the better.

Luckily, the company just raised $16 billion, which is basically a seed round in AI and is like 10% of what any serious hyperscaler is planning to spend on CapEx this year, but which will mean a lot more self-driving cars on the road. The round values Waymo at $126 billion and brings total funding to ~$27 billion. The investor list suggests that if they keep doing their job, there’s plenty more where that came from: Sequoia, a16z, DST Global, Dragoneer, Silver Lake, Tiger Global, Fidelity, T. Rowe Price, Kleiner Perkins, and Temasek, alongside majority investor Alphabet. This is the largest private investment ever in an autonomous vehicle company.
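For the curious, here is a quick back-of-the-envelope check on that “like 10%” figure. It is a minimal Python sketch that uses only numbers quoted in this issue: the $16 billion round and the 2026 CapEx figures cited above for Google ($185 billion) and Amazon ($200 billion). The variable and function names are made up for illustration.

```python
# Figures quoted in this issue, in billions of US dollars.
WAYMO_ROUND = 16
CAPEX_2026 = {"Google": 185, "Amazon": 200}


def round_as_share_of_capex(round_size, capex):
    """Return the round as a percentage of each company's planned CapEx."""
    return {name: 100 * round_size / spend for name, spend in capex.items()}


shares = round_as_share_of_capex(WAYMO_ROUND, CAPEX_2026)
for name, pct in shares.items():
    print(f"{name}: {pct:.1f}%")
```

On those two figures, the round works out to roughly 8 to 9% of a single hyperscaler’s planned annual CapEx, so “like 10%” is in the right ballpark.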

We’re talking a lot about fast takeoffs this week, and Waymo is a case study in gradually, then suddenly.

Waymo started in 2009 as a secret Google project, with a handful of engineers modifying a Toyota Prius to drive itself across the Golden Gate Bridge. For years, the punchline was that self-driving cars were always five years away. Google spent $1.1 billion between 2009 and 2015 and had essentially nothing to sell for it. The pessimists were winning. The “five years away” joke kept landing.

And then it started working. 127 million fully autonomous miles driven. A 90% reduction in serious injury crashes versus human drivers. 15 million rides in 2025 alone (3x 2024). Over 400,000 rides per week across six US metro areas.
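Here is a rough sanity check on how those ride numbers fit together, as a small Python sketch. It assumes “3x 2024” means 2025 rides were three times the 2024 total, and that the 400,000 weekly figure (a floor, since the text says “over”) holds for a full 52-week year. Both are simplifying assumptions, and the names are illustrative.

```python
# Ride figures quoted in the text above.
RIDES_2025 = 15_000_000   # total rides in 2025
GROWTH_VS_2024 = 3        # assumption: 2025 total was 3x the 2024 total
RIDES_PER_WEEK = 400_000  # current weekly pace (stated as "over 400,000")
WEEKS_PER_YEAR = 52

# Implied 2024 total if 2025 was three times as large.
implied_2024 = RIDES_2025 / GROWTH_VS_2024

# What the current weekly pace would add up to over a full year.
annualized = RIDES_PER_WEEK * WEEKS_PER_YEAR

print(f"Implied 2024 rides: {implied_2024:,.0f}")
print(f"Annualized current pace: {annualized:,}")
print(f"Current pace vs 2025 total: {annualized / RIDES_2025:.2f}x")
```

Under those assumptions, 2024 comes out to about 5 million rides, and the current weekly pace annualizes to roughly 20.8 million, already above the 2025 total.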

They’re in Phoenix, San Francisco, LA, Austin, Atlanta, and Miami. If you’ve ridden in a Waymo in one of those cities, the thing that strikes you is how fast it goes from feeling sci-fi to feeling normal. Now, they’re planning to launch in 20+ additional cities in 2026, including Tokyo and London. Saving lives around the globe.

My kids are never going to get their driver’s licenses, are they?

(5) Contrary Tech Trends Report

Contrary Capital

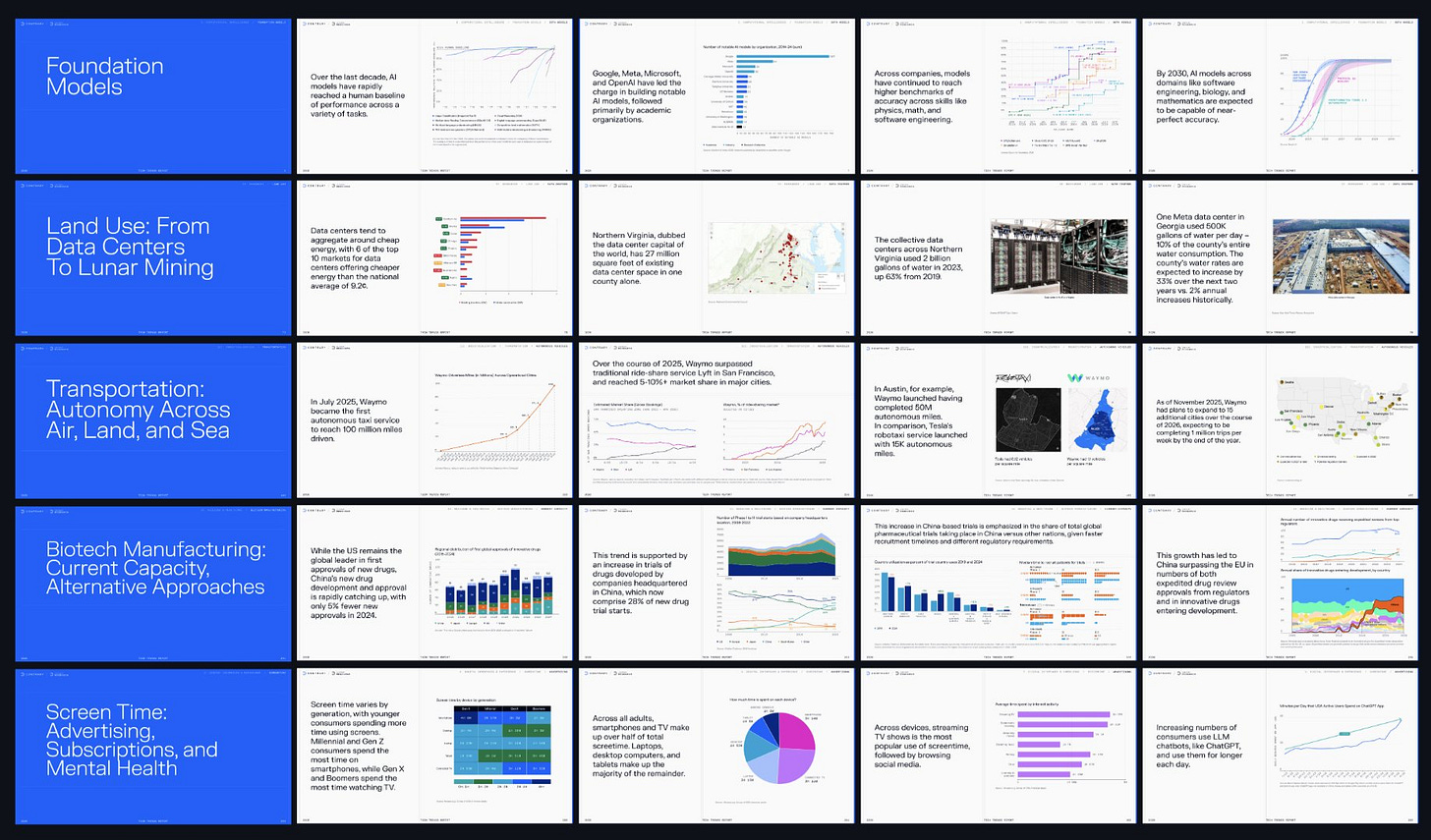

My friends at Contrary just dropped their annual Tech Trends Report, full of charts, data, and insights across a wide range of technological frontiers. It’s one of the most optimistic documents I’ve read in a while.

A few things jumped out. AI tools are reaching adoption speeds that make the internet’s growth curve look leisurely. OpenEvidence, an AI tool for doctors, hit 300,000 active prescribers in 11 months, a milestone that took Doximity, the previous standard-bearer, 11 years. ChatGPT is at 800 million weekly active users with retention rates approaching Google Search. And coding AI tools like GitHub Copilot, Cursor, and Claude Code are each approaching or at $1 billion in ARR. AI companies are reaching revenue milestones 37% faster than traditional SaaS companies did.

On energy, the numbers are staggering. Welcome to the ELECTRONAISSANCE. Total US electricity generation is projected to grow 35-50% by 2040, driven by data centers, EVs, and manufacturing. The country is on track to invest $1.3 trillion in AI-related capital expenditure alone by 2027, and global data center spending is projected to reach $3-5 trillion by 2030. Meanwhile, wind and solar are the fastest-growing energy sources globally, and US fab capacity is projected to grow 203% from 2022 to 2032, more than double the global average. America is building again.

And then there’s the frontier stuff. Lonestar Data Holdings sent a data storage unit to the moon in 2025. The report lays out how lunar bases could unlock helium-3 for clean fusion energy (which For All Mankind predicted), rare earth metals for EVs and batteries, and platinum group metals for hydrogen fuel cells. Artemis II, a crewed lunar flyby, is scheduled for April 2026. The US Space Force wants a 100kW nuclear reactor on the moon by decade’s end. Microsoft sank a data center underwater and saw one-eighth as many hardware failures. And 90% of US factories still operate without robots, which means we have a lot of productivity gains ahead.

There are challenges too, of course: aging grid infrastructure, water stress around data centers, the fact that 60% of CEOs say AI projects haven’t delivered positive ROI yet. But the overwhelming takeaway is that the buildout is happening, the adoption curves are real, and the scale of investment is unlike anything we’ve seen.

We are living in a sci-fi novel. What a time to be alive.

EXTRA DOSE (for not boring world subscribers) BELOW THE FOLD

Skyryse, Machina Labs, OpenAI x Ginkgo, General Matter x Mario

Subscribe to Not Boring by Packy McCormick to keep reading this post and get 7 days of free access to the full post archives.