I, Exponential

Building Modern Cathedrals

Welcome to the 155 newly Not Boring people who have joined us since last Tuesday (August is slow!)! If you haven’t subscribed, join 211,079 smart, curious folks by subscribing here:

Today’s Not Boring is brought to you by... Fundrise

Venture capital has finally been democratized. You can now invest like the largest LPs in the world, gaining access to one of the best performing asset classes of the last 20+ years.

Fundrise is actively investing in some of the most prized private tech firms in the world—including those leading the AI revolution.

This first-of-its-kind product has removed nearly all of the typical barriers that gatekeep VC by offering:

No accreditation required.

No 2-and-20 fees.

No membership fees.

The lowest venture investment minimum ever offered.

Join America’s largest direct-access alternative asset manager and the more than 2 million people already using Fundrise.

Hi friends 👋,

Happy Tuesday!

Recently, I’ve noticed a lot of chatter about degrowth, the idea that we need to stop growing, consume less, go back to the way things were. I think it’s one of the dumbest and most dangerous ideas there is, modern Malthusianism.

To be clear: I do not think that we should, as Jane Goodall suggested, get rid of ~7 billion people to maybe save the planet. I think we should harness those people’s ideas and efforts to create abundance for even more people, all without harming the planet.

We should celebrate our progress, not for technology’s sake, but because it’s taken the work of billions of people over millennia to get here. So today, we’ll do that.

Dan told me to make this one much shorter, but I kept it long and full of messy details to express just how complex and improbable progress is.

Let’s get to it.

I, Exponential

“There is no reason to regret that I cannot finish the church. I will grow old but others will come after me. What must always be conserved is the spirit of the work, but its life has to depend on the generations it is handed down to and with whom it lives and is incarnated.”

-Antoni Gaudí

There’s a popular complaint, consistently good for thousands of agreeing likes, that because we no longer construct cathedrals, humans just don’t know how to build beautiful things anymore.

“We can’t. We don’t know how to do it.”

lol. lmao.

The implication that we can’t build beautiful things anymore, that we’ve lost our way, that we must reject modernity, is misguided and backwards.

Certainly, no one person, no matter how insanely gifted, knows how to make anything like the Duomo. No one person knows how to make anything, even a pencil. Collectively, though, we build things that make cathedrals look like simple LEGO sets.

Exponential technology curves are emergent cathedrals to humanity’s collective efforts.

I realize that statement might elicit an “I am begging tech bros to take just one humanities course.” But hear me out.

What makes exponential technology curves so beautiful to me is how improbable and human they are. They require an against-all-odds brew of ideas, breakthroughs, supply chains, incentives, demand signals, luck, setbacks, dead ends, visionaries, hucksters, managers, marketers, markets, research, commercialization, and je ne sais quoi to continue, yet they do.

I’ll show you what I mean by exploring three exponential technology curves: Moore’s Law, Swanson’s Law, and the Genome Law. All three required initial sparks of serendipity to even get off the ground, after which a multi-generational, global, maestroless orchestra has had to play the right notes, or the wrong ones at the right times, for decades on end in order for the sparks to turn into curves.

I think it’s easy to forget the human element when we talk about technology, or something as abstract as an exponential curve, but that’s exactly what drives technological progress: human effort. As Robert Zubrin put it in The Case for Nukes:

The critical thing to understand here is that technological advances are cumulative. We are immeasurably better off today not only because of all the other people who are alive now, but because of all of those who lived and contributed in the past.

The bottom line is this: progress comes from people.

Exponential technology curves emerge from humanity’s collective insatiable desire for more and better, and from the individual efforts of millions of people to fulfill that desire, often indirectly, in their own way.

Do you see how unbelievable these curves are? That they’re the Wonders of the Modern World?

I can tell you’re not with me yet. Hmmmm. What if we just considered the pencil, and worked our way up from there?

I, Pencil

Do you know how to make a pencil?

In 1958, Leonard E. Read wrote a short letter, I, Pencil, from the perspective of the “seemingly simple” tool.

“Simple? Yet not a single person on the face of the earth knows how to make me.”

The pencil narrator writes of the trees that produce his wood, of the trucks, ropes, and saws used to harvest and cart the trees, and of the ore, steel, hemp, logging camps, mess halls, and coffee that help produce those trucks, ropes, and saws.

He writes of the millwork and the millworkers, the railroads and their communications systems, the waxing and kilning that go into his tint, into the making of the kiln, the heater, the lighting and power, the “belts, motors, and all the other things a mill requires.”

All of those things fit into three paragraphs; he goes on for another eight. But I’ll spare you. You get the point:

Actually, millions of human beings have had a hand in my creation, no one of whom even knows more than a very few of the others… There isn’t a single person in all these millions, including the president of the pencil company, who contributes more than a tiny, infinitesimal bit of know-how.

No one involved in the process knows how to make a pencil, but all contribute to the process, whether they intend to or not.

If that’s true of a pencil, imagine how little of the know-how that goes into a solar panel or semiconductor is contributed by any single person. It must be measured in nanometers.

I, Exponential

To tell the story of any exponential technology curve from the perspective of the curve itself would take more than an essay. It would fill a library. The pencil is but a minuscule input into any one of them, and even the pencil’s story is endlessly deep.

Scaling up to the level of semiconductors, solar panels, and DNA sequencing, each infinitely more complex than a pencil, and introducing exponential improvement into the mix, reveals the man-made miracle that is the exponential technology curve. I’ll tell their abridged stories on their behalf.

Moore’s Law: Semiconductors

Just as no one person knows how to make a pencil, no one fully understands the intricacies behind semiconductors.

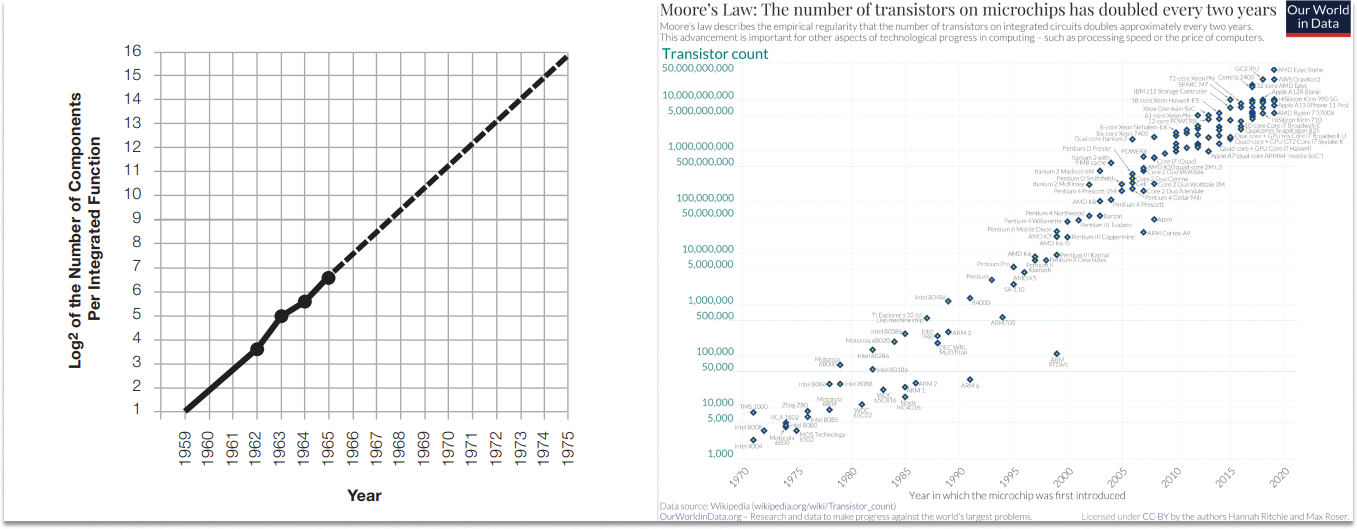

Moore’s Law is the most famous of the exponential technology curves. It states that the number of transistors on an integrated circuit doubles roughly every two years.

When Fairchild Semiconductor’s Gordon Moore wrote the 1965 paper that birthed the law – the wonderfully named Cramming more components onto integrated circuits – he only projected out a decade, through 1975.

Famously, though, Moore’s Law has held true through the modern day. Apple’s M1 Ultra chip packs the most transistors on a single commercial chip – 114 billion – and Intel recently said it expects there will be 1 trillion transistors on chips by 2030. When Moore wrote the paper, there were only 50.
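For the skeptics, here is a rough sanity check of those numbers in Python. It is a napkin-math sketch, not a formal statement of the law: the function names are made up for illustration, and I am assuming the M1 Ultra dates to 2022, which puts it 57 years after Moore’s paper.

```python
import math


def implied_doubling_time(start_count, end_count, years):
    """Years per doubling implied by growing from start_count to end_count."""
    doublings = math.log2(end_count / start_count)
    return years / doublings


def projected_count(start_count, years, doubling_time=2.0):
    """Count after `years` if it doubles every `doubling_time` years."""
    return start_count * 2 ** (years / doubling_time)


# Figures from the text: about 50 transistors in 1965, 114 billion on the M1 Ultra.
# Assumption: the M1 Ultra is dated to 2022.
years_elapsed = 2022 - 1965
doubling_years = implied_doubling_time(50, 114e9, years_elapsed)
strict_projection = projected_count(50, years_elapsed)

print(f"Implied doubling time: {doubling_years:.2f} years")
print(f"A strict two-year doubling predicts about {strict_projection:,.0f} transistors")
```

Under those assumptions, the implied doubling time comes out a bit under two years, so the leading edge has run slightly ahead of a strict two-year cadence.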

Today, some are worried that Moore’s Law is nearing its end. How much smaller can we go than 3nm?! Others, including chip god Jim Keller, believe that we still have a lot of room to run.

Me? If you’re asking me to weigh in on semiconductor manufacturing and the limits of known physics, you’ve come to the wrong place. What I do know is that betting against Moore’s Law has been a losing bet for nearly six decades.

Moore’s Law has been so consistent that we take it for granted, but its continuation has been nothing short of miraculous. There’s a rich story hidden beneath its curve.

In Chip War, author Chris Miller tells that story. He specifically calls out the breadth of roles that have contributed to maintaining Moore’s Law:

Semiconductors spread across society because companies devised new techniques to manufacture them by the millions, because hard-charging managers relentlessly drove down their cost, and because creative entrepreneurs imagined new ways to use them. The making of Moore’s Law is as much a story of manufacturing experts, supply chain specialists, and marketing managers as it is about physicists or electrical engineers.

That’s just one paragraph in a 464-page book full of colorful characters and dizzying details. Like the precision achieved by ASML, the crucial Dutch company that makes the EUV lithography machines used to create transistors. MIT Technology Review explains the mind-bending precision of ASML’s mirror supplier, Zeiss:

These mirrors for ASML would have to be orders of magnitude smoother: if they were the size of Germany, their biggest imperfections could be less than a millimeter high.

There are many such stories, each of which is a nesting doll of its own stories. For Zeiss to manufacture such smooth EUV mirrors took a century of progress in fields like materials science, optical fabrication, metrology, ion beam figuring, nuclear research, active optics, vibration isolation, and even computing power – Moore’s Law itself!

I asked Claude to come up with some rough napkin math on how many person-hours have gone into the creation and continuation of Moore’s Law. It came up with 5 billion over the past 60 years. When I pushed it to include adjacent but necessary industries, we got to 9-11 billion person-hours across research, engineering, manufacturing, equipment development, materials science, computer manufacturing, software and application development, electrical engineering, and investing.

This is an extremely conservative estimate. If you were to take the I, Pencil approach, you’d need to include the people who mine the quartzite from which silicon is extracted, and all of the people who make all of the things that support their effort, as just one example. You’d need to include the many people over the centuries before 1959 whose efforts led to the first semiconductor in the first place. You’d have to include the teachers who taught the people who drove Moore’s Law forward, and maybe even the people who taught them. Certainly, you’d need to include the consumers and businesses whose demand for better compute and richer applications pulled the industry forward.

You could play this game ad infinitum, and at the end, all of those person-hours turn into a clean chart that looks like this:

There isn’t a single person in all these millions who contributes more than a tiny, infinitesimal bit to semiconductors’ progress, yet that progress flows so smoothly it’s called a Law.

Swanson’s Law: Solar Panels

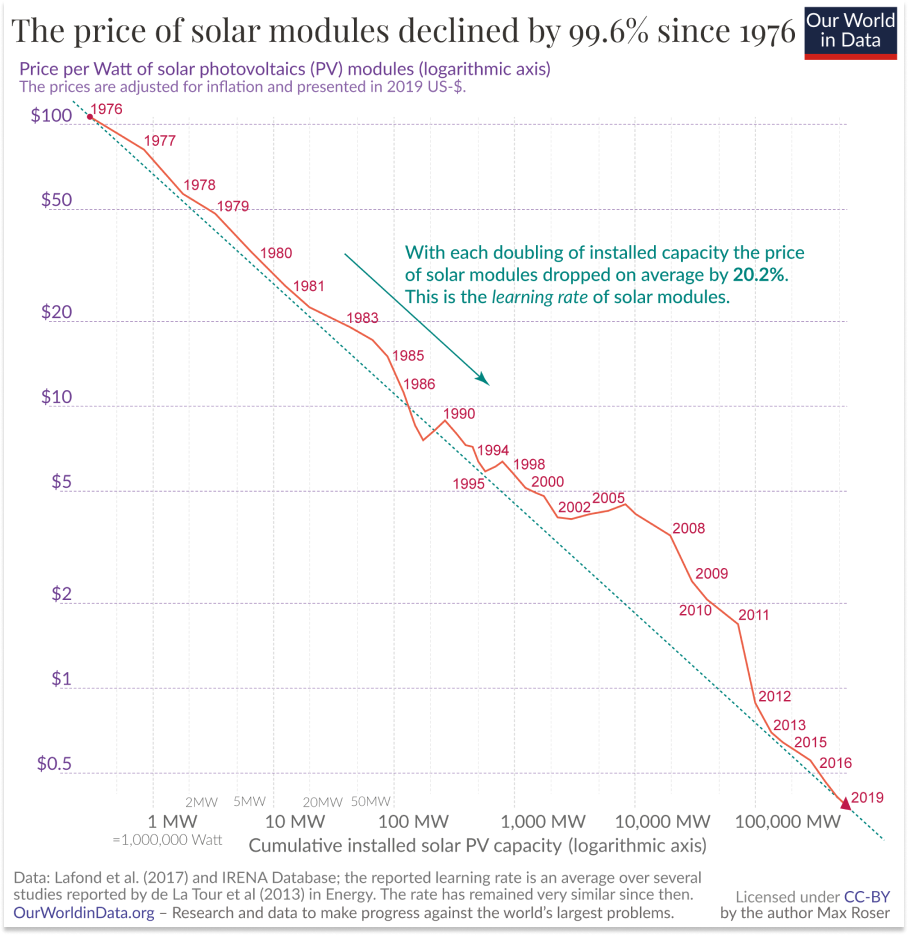

No one person knows how to pull electricity from the sun, either, but we do that, too, to the tune of 270 terawatt-hours (TWh) per year.

The solar story introduces another fun dimension to our exploration of exponentials: it’s impossible to pull them apart. One curve propels the next.

Moore’s Law is an important input into Swanson’s Law: the observation that the price of solar photovoltaic modules tends to drop 20 percent for every doubling of cumulative shipped volume.

The first modern solar PV cell was a direct result of research on transistors. In 1954, a Bell Labs scientist, Daryl Chapin, was trying to figure out how to power remote telephone stations in the tropics. He tried a bunch of things, including selenium solar PV cells, but they were just 0.5% efficient at converting sunlight into electricity. Not competitive. As luck would have it, though, a Bell Labs colleague named Gerald Pearson was doing research on silicon p-n junctions in transistors. Pearson suggested that Chapin replace the selenium cells with silicon ones, and when he did, silicon PV cells were 2.3% efficient. After further improvements by the Bell Labs scientists, they achieved 6% efficiency by the time Bell Labs announced its “Solar Battery” in 1954.

Ever since, progress against Moore’s Law has contributed to progress in solar panel price and efficiency through, among other things, thinner silicon wafers, precision manufacturing from fabs, advanced materials, and computer modeling. Innovation in chips spilled over to cells.

But semiconductors are just one of many, many factors that have driven down the price of solar PV cells.

That Bell Labs anecdote above comes from a phenomenal two-part essay by Construction Physics’ Brian Potter: How Did Solar Panels Get So Cheap? It’s the story behind the curve.

It’s easy to look at a curve like solar’s and simply credit Wright’s Law and learning curves – cost declines as a function of cumulative production – to trust that as we make more of something, we can make it more cheaply. In solar’s case, every doubling of cumulative production leads to a 20% decline in price. You can just plug the numbers into a spreadsheet, and voila!
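The spreadsheet version really is that short. Here is a minimal Python sketch of the learning-curve math, using the 20% decline per doubling from the text. The $100-per-watt starting price and the one-unit starting volume are hypothetical inputs chosen only to make the output easy to read, and the function name is my own.

```python
import math


def wrights_law_price(initial_price, initial_volume, cumulative_volume, learning_rate=0.20):
    """Price once cumulative production has grown from initial_volume to cumulative_volume.

    Each doubling of cumulative volume multiplies the price by (1 - learning_rate).
    """
    doublings = math.log2(cumulative_volume / initial_volume)
    return initial_price * (1 - learning_rate) ** doublings


# Hypothetical starting point: $100 per watt at 1 unit of cumulative volume.
for n_doublings in (0, 1, 5, 10, 20):
    price = wrights_law_price(100.0, 1.0, 2.0 ** n_doublings)
    print(f"{n_doublings:>2} doublings -> ${price:,.2f} per watt")
```

Ten doublings (roughly a 1,000x increase in cumulative volume) cut the price by about 89%, and twenty doublings cut it by about 99%. The formula is trivial; getting the world to actually produce those doublings is not.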

But that approach misses all of the magic.

After the serendipitous encounter at Bell Labs, itself a study worthy of the excellent 422-page The Idea Factory, solar cells struggled to find a market. They were just too expensive, even for the Coast Guard and Forest Service. Chapin calculated that it would cost $1.5 million to power the average home with solar PV cells.

But in 1957, the Soviets launched Sputnik (powered by heavy, short-lived batteries). The Space Race was on. By March of the next year, the US launched the first solar powered satellite, Vanguard I.

Over the next 15 years, the US and USSR launched over 1,000 satellites, more than 95% of which were powered by solar PV cells, which were much lighter than batteries and provided energy nearly as long as the sun shone. As late as 1974, satellites made up 94% of the solar PV market. The cost fell 300% to $100 per watt on the back of satellite demand.

Without perfectly-timed demand from satellites, solar PV might have been DOA. I trust I don’t need to remind you to consider all of the work, research, and materials that went into getting satellites to space in the first place as an input into solar’s progress.

Even at $100 per watt, solar wasn’t competitive with other electricity sources for terrestrial use cases, but an entrepreneur – Elliot Berman – launched a company to change that. When his Solar Power Corporation failed to raise from venture capitalists, it found an unlikely backer in Exxon. Solar Power Corporation cut costs by “using waste silicon wafers from the computer industry” and using full wafers instead of cutting off their rounded edges. They made trade-offs – “a less efficient, less reliable, but much cheaper solar PV cell” – and by 1973, they were able to “produce solar PV electricity for $10 per watt, and sell it for $20.”

I’m getting carried away in the details, I know. This stuff fascinates me. But the show must go on, so I highly suggest you read Potter’s posts (Part I & Part II) for the full story.

Suffice it to say, it’s a tale of entrepreneurship, government dollars, an international relay race across the US, Japan, Germany, and China, individual installation choices, an ecosystem of small businesses formed to finance and install panels, environmentalism, economies of scale, continued R&D, climate goals, and much, much more. The result is that in 70 years, solar has gone from the most expensive source of electricity to one of the cheapest thanks to the efforts of millions of people.

There isn’t a single person in all these millions who contributes more than a tiny, infinitesimal bit to solar’s progress, yet that progress flows so smoothly it’s called a Law.

The Genome Law: DNA Sequencing

Exponential technology curves even contribute to our knowledge of ourselves, even though not one of us knows how to catalog all of the base pairs that make up the DNA in our own bodies.

Over the past couple of years, I’ve spent enough time with Elliot and seen enough techbio pitches that this chart is seared in my brain:

It shows that since 2001, the cost to sequence a full human genome – to read the instructions encoded in the 3.2 billion DNA base pairs inside each person’s body – has fallen from $100 million to $500. Last September, after the chart was published, Illumina announced that they can now read a person’s entire genetic code for under $200, one step closer to their stated goal of $100.

The 200,000x decrease in 21 years far outstrips Moore’s Law; since 2007, the chart looks exponential even though it’s log scale. The Genome Law is the observation that the cost to sequence the complete human genome drops by roughly half every year.
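To see how the chart’s endpoints relate to “roughly half every year,” here is a small Python sketch. It takes the figures above at face value ($100 million in 2001, $500 about 21 years later) and compares them with what a two-year, Moore’s Law-style halving would have delivered over the same stretch. The function name is hypothetical.

```python
import math


def halving_time(start_cost, end_cost, years):
    """Years per halving implied by a fall from start_cost to end_cost."""
    halvings = math.log2(start_cost / end_cost)
    return years / halvings


start_cost = 100e6  # dollars per genome in 2001, per the chart
end_cost = 500.0    # dollars per genome about 21 years later
span_years = 21

fold_decrease = start_cost / end_cost
genome_halving_years = halving_time(start_cost, end_cost, span_years)

# For comparison: the fold improvement if cost had halved every two years instead.
moore_pace_fold = 2 ** (span_years / 2)

print(f"Fold decrease: {fold_decrease:,.0f}x")
print(f"Implied halving time: {genome_halving_years:.2f} years")
print(f"Two-year halving over the same span: {moore_pace_fold:,.0f}x")
```

Averaged over the whole period, the cost halved about every 1.2 years. The average hides the shape of the curve, though: most of the drop came after 2007, when the pace was much faster than that.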

Like semiconductors and solar panels, the story of the gene can fill a whole book. It does: Dr. Siddhartha Mukherjee’s excellent The Gene: An Intimate History. You should read it, too.

One thing Mukherjee failed to mention, though, is that Francis Crick was microdosing acid when he uncovered DNA’s double-helix structure, along with James Watson and Rosalind Franklin, in 1953. You can see it in his eyes, right?

Nor did Mukherjee mention the role that LSD played in DNA sequencing three decades later, when Cetus Corporation biochemist Kary Mullis dropped some acid on the drive out to his cabin in Mendocino County, California.

While road tripping, Mullis had a eureka moment where he envisioned a process that could copy a single DNA fragment exponentially by heating and cooling it in cycles and using DNA polymerase enzymes. When he got to the cabin, he worked out the math and science behind his idea, and within a couple hours, he was confident that polymerase chain reaction (PCR), a way to amplify particular DNA sequences billions of times over, would work.

“What if I had not taken LSD ever; would I have still invented PCR?” Mullis later mused. “I don’t know. I doubt it. I seriously doubt it.”

Drugs are fun. These anecdotes are fun. But I hope they illustrate a larger point: progress that now seems inevitable was anything but.

Had Swiss chemist Albert Hofmann not accidentally absorbed, through his fingertips, the LSD he’d synthesized while researching compounds derived from ergot, we may not understand the human genome today. That he did (and then intentionally took a healthy 250 microgram dose to explore further) is one of many little things that had to go just right in order to sequence the genome.

Two years after Mullis invented PCR, the US Department of Energy and National Institutes of Health met to discuss sequencing the entire genome. By 1988, the National Research Council endorsed the idea of the Human Genome Project. And by 1990, the Human Genome Project (HGP) kicked off with $200 million in funding and James Watson at its head. At the core of the Human Genome Project’s process was Mullis’ PCR.

Government funding and coordination drove public and private innovation in sequencing technology. In 1998, an upstart competitor named Celera, led by now-legendary Craig Venter, entered the race, intending to finish the project faster and cheaper using a shotgun sequencing technique. Competition accelerated sequencing, and under political pressure – a startup upstaging the HGP would have been a black eye – the HGP and Celera jointly announced a working draft of the human genome in 2000:

By 2003, two years ahead of schedule and for a cost of $3 billion, humans had succeeded in mapping the human genome. And then the Genome Law started in earnest.

The story of genome sequencing’s cost declines will be familiar now.

Government funding played a role; in 2004, the US National Human Genome Research Institute kicked off a grant scheme known as the $100,000 and $1,000 genome programs, through which they awarded $230 million to 97 research labs and industrial scientists.

Private enterprise played a role, too. Illumina, which now employs nearly 10,000 people working to bring the cost of sequencing down, acquired a British company named Solexa for $600 million in 2007 to expand into next-generation sequencing. (Even investment bankers have a hand in these exponential technology curves!) Illumina integrated the technology that Solexa brought to the table into its product line, leading to the development of the widely-used sequencers like the HiSeq and MiSeq series, and more recently, the NovaSeq series.

Look at those machines. Without even considering the really challenging pieces inside, imagine the plastics, touchscreen, and feet pad manufacturers who provided components. Imagine the printer designers from whom Illumina’s industrial designers clearly cribbed notes. I couldn’t do what any one of them does, could you?

Moore’s Law has clearly played its part – providing the computational foundation for managing and analyzing the large datasets involved in genomics – but the Genome Law has primarily been fueled by domain-specific advancements in biotech and chemistry from scores of academic researchers and industry pros, and by massive parallelization. To understand those, you should read Elliot’s excellent piece on Sequencing.

Today, a number of companies are competing to bring down the cost of sequencing and open up more use cases, which in turn will drive down the cost of sequencing. One startup, Ultima Genomics, came out of stealth last May with an announcement that it can deliver the $100 genome. That’s promising, but remains to be seen. You can be sure, however, that the announcement spurred acceleration among competitors.

I’m leaving out the work of millions of people across generations, from Mendel to metallurgists, each of whom has played a small but important role.

There isn’t a single person in all these millions who contributes more than a tiny, infinitesimal bit to the progress in DNA sequencing, yet that progress flows so smoothly it’s called a Law.

When Exponentials Break

You’ll have to excuse me if I’ve gotten a little carried away. Reading back what I wrote, I may have made these curves seem a little too inevitable, a little too Law-like.

They’re not really laws, though. Not every technology improves exponentially, and even curves that start going exponential aren’t guaranteed to continue.

Nuclear energy – both fission and fusion – provides counterexamples.

Fission and Fusion

America first generated commercial power from fission with the opening of the Shippingport plant in 1957. By 1965, nuclear plants generated 3.85 TWh. By 1978, just 13 years later, nuclear power generation had grown 75x, reaching 290 TWh, good for a 40% CAGR. Had that growth rate continued for just another eight years, nuclear would have generated more electricity by 1986 (4,180 TWh) than the United States consumed in 2022 (4,082 TWh).
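If you want to check that arithmetic yourself, here is a rough Python sketch using the generation figures above. The helper names are my own, and the exact projection depends on whether you round the growth rate to 40% before compounding.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate between two values."""
    return (end_value / start_value) ** (1 / years) - 1


def extrapolate(value, rate, years):
    """Value after compounding at `rate` for `years` more years."""
    return value * (1 + rate) ** years


# Figures from the text, in TWh.
generation_1965 = 3.85
generation_1978 = 290.0
us_consumption_2022 = 4082.0

growth_multiple = generation_1978 / generation_1965
growth_rate = cagr(generation_1965, generation_1978, 1978 - 1965)
projected_1986 = extrapolate(generation_1978, growth_rate, 8)

print(f"Growth multiple: {growth_multiple:.0f}x")
print(f"CAGR: {growth_rate:.1%}")
print(f"Projected 1986 generation: {projected_1986:,.0f} TWh")
print(f"Exceeds 2022 US consumption: {projected_1986 > us_consumption_2022}")
```

With the unrounded growth rate, the 1986 projection still lands just above 2022 consumption. Rounding the rate up to an even 40% pushes the projection a few percent higher, which is why napkin versions of this number vary a little.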

But the growth stopped. Nuclear power generation actually dipped in 1979, the year of the Three Mile Island accident. Three Mile Island often takes the blame for nuclear’s demise, but a confluence of factors – economic, regulatory, and cultural – killed nuclear’s growth.

Unpacking all of the factors would take an entire newsletter (or podcast series 👀), but for now, what’s important to understand is that continued exponential growth isn’t inevitable once it’s started. It takes will, effort, luck, demand, and a million other little things to go right, which makes it all the more incredible when it happens.

Fusion has fallen off, too. As Rahul and I discussed in The Fusion Race, “The triple product – of density, temperature, and time – is the figure-of-merit in fusion, and it doubled every 1.8 years from the late 1950s through the early 2000s.” That progress slowed, however, as the world’s governments concentrated their resources on the ambitious but glacial ITER project.

But there’s emergent magic in these exponential technology curves, and dozens of privately funded startups are filling in where governments left off and racing to get us back on track. If they do, we’ll shift from the more scientific triple product curve to a more commercial fusion power generation curve, and watch the wonders that unfold when that goes exponential itself.

Zooming up a level, despite the shortcomings in any one particular curve, what’s remarkable is that all of the curves – technological, cultural, economic – resolve into one big, smooth curve: the world’s GDP has increased over 600x since the dawn of the first millennium.

World GDP is an imperfect measure of progress, but it’s a pretty good one. It represents a better quality of life for more people; World GDP per capita is also increasing exponentially, even as the planet supports more human life. In fact, as Zubrin pointed out, “The GDP/capita has risen as population squared, while the total GDP has risen, not in proportion to the population size, but in proportion to the size of the population cubed!” The more people working on these curves, the faster they grow.

I think that’s what all of the curves represent at the most fundamental level: billions of people over millennia trying to make a better life for themselves and their families by contributing in the specific way they can, and succeeding in aggregate.

But where are the literal cathedrals?

Resurrecting Sagrada Família

Exponential technology curves, and even their direct products, are beautiful but hidden.

Semiconductors are hidden inside of computers. Solar panels are visible but not particularly beautiful. DNA sequencing takes place in a lab.

These things are awesome, but I understand if you don’t think they’re as beautiful as the Duomo.

Well, consider the Basilica de la Sagrada Família.

In 1883, a small group of Catholic devotees of Saint Joseph (Josephites) entrusted a young Catalan architect, Antoni Gaudí, to build them a church.

Gaudí, as anyone who has been to Barcelona will gladly tell you, was a genius. He was also slow and unconventional. As architect Mark Foster Gage writes in a piece for CNN:

Gaudí’s vision of the church was so complex and detailed from the start that at no point could it be physically drawn by hand using the typical scale drawings so common to almost all architectural projects. Instead, it was almost entirely constructed through the making of large plaster models to communicate Gaudí’s desires to the army of stonemasons slowly liberating its form from blocks of local Montjuïc sandstone.

That worked well enough while Gaudí was there to oversee the models and the stonemasons, but in 1926, the architect was hit by a tram car. Dressed poorly and with nothing but snacks in his pocket, Gaudí was mistaken for a pauper and not given proper care. He died within days.

When Gaudí died, 43 years after taking on the project, Sagrada Família was only 10-15% completed. He did leave behind sketches, photographs, and plaster models that guided builders for the next decade. Those, unfortunately, were destroyed in 1936, during the Spanish Civil War, when revolutionaries burned the sketches and photographs, smashed the models, and desecrated Gaudí’s tomb.

Over the next four decades, construction snailed along in fits and starts. Gage explains that “Even after nearly a full century of construction, by 1978 there remained numerous aspects of the project that had still never been designed, and even more that nobody knew how to build.”

“We can’t. We don’t know how to do it.” for real.

In 1979, however, a 22-year-old Kiwi Cambridge grad student, Mark Burry, visited Sagrada Família and interviewed some of Gaudí’s former apprentices. They showed him the boxes of broken model fragments, and offered him an internship. Burry got to work trying to reconstruct the mind of Antoni Gaudí in order to construct the church that lived within.

At first, Burry tried to hand-draw the “complex intersection of weird shapes, including things like conoids and hyperbolic paraboloids” but he realized that the tool wasn’t up to the task. Gage again:

Burry realized as early as 1979 that the only tool that could possibly calculate, in a reasonable amount of time, the structures, forms, and shapes needed to solve the remaining mysteries of the Sagrada Família was one relatively new to the consumer world: the computer.

Moore’s Law, baby!

Gage’s piece, from which I learned this story, is titled How robots saved one of the world’s most unusual cathedrals. It’s the story of how, after nearly a century of slow progress against one man’s genius design, technologies built over billions of person-hours by millions of people are finally bringing Sagrada Família to life.

Burry brought in software used to design airplanes to solve the otherwise-impossible problem of translating Gaudí’s sketches of bone columns into 3D models.

In order to actually construct the building, Burry and team hooked their computers up to a relatively new invention, CNC (computer numerically controlled) machines. CNC machines were themselves a product of a number of technological advances in computing power, data storage, electronics, motors, material science, user interfaces, networking and connectivity, control systems, and software designs. All of those curves converged in time for Burry to feed his 3D models into CNC machines that could precisely carve their designs out of stone.

Today, the team working on Sagrada Família uses a full arsenal of modern technology, from 3D printers to Lidar laser scans, from sensors to VR headsets. The official architecture blog of the Sagrada Família even put together a short video on the role of technology in the building’s construction:

Sagrada Família is an architectural wonder, maybe the greatest in the world. Brunelleschi could not have built it with the tools and labor available when he conceived of the Duomo. Architects and builders have struggled for 140 years to complete it. Burry and team are now racing to complete it by 2026 to mark the centennial of Gaudí’s death.

Thanks to the exponential technology curves built by billions of people, and Burry’s application of them, “We can. We do know how to do it.”

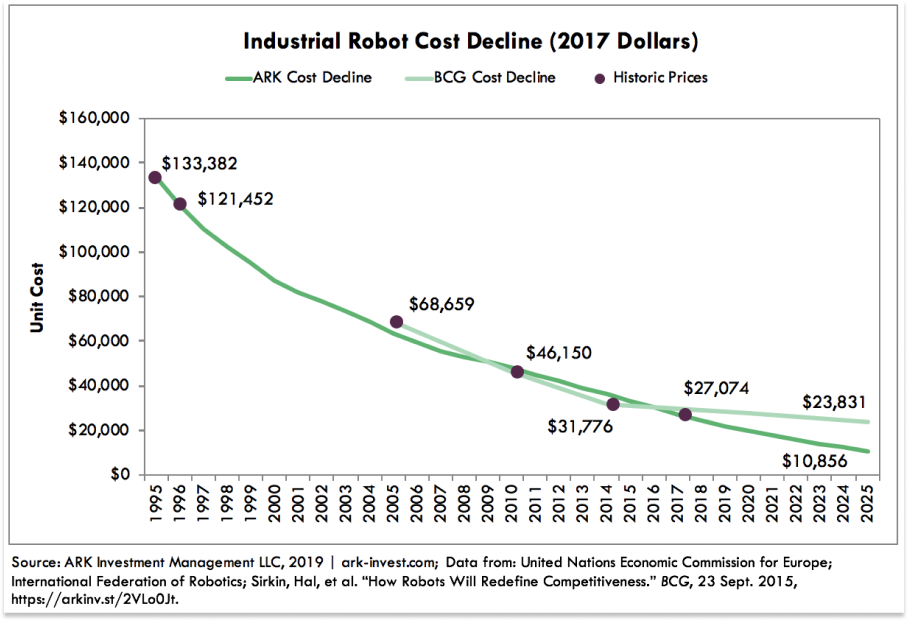

That just leaves the question of will and economics. One of the reasons we don’t build churches and stone buildings like we used to is that they just don’t make financial sense. The modern Medicis are funding modern pursuits, like space travel and longevity.

But there’s cause for hope. Building the next Sagrada Família, and the next, and the next, will get cheaper and faster as the physical world hops onto exponential technology curves, and those curves continue to exponentiate. Modeling will get better, robots will get cheaper and better, 3D printing will get faster and more accurate.

Eventually, we’ll have the machines that can build the machines that can build Sagrada Famílias.

The claim that exponential technology curves are more beautiful than cathedrals is a bold one, but I stand by it.

You can’t use cathedrals to make exponential technology curves, but you can use exponential technology curves to make cathedrals. And exponential technology curves continue to expand our capabilities, so that we can build even greater cathedrals, literal and metaphorical, as time goes on.

Less glibly, we should be able to appreciate the beauty of the past while celebrating the tremendous things humans have accomplished since and working to make the future even better.

To use the same G.K. Chesterton quote that Read did in I, Pencil: “We are perishing for want of wonder, not for want of wonders.”

There are wonders all around us. We should wonder at these curves the same way we wonder at the ceiling of the Sistine Chapel, not for the technological progress they represent alone, but because they show us what humans, from the lumberjack to the Nobel Laureate, are capable of.

Trust the Curves

Let’s wrap this up on a practical note. Exponential technology curves aren’t just beautiful, they’re investible.

A key pillar of Not Boring Capital’s Fund III thesis is that enough of these curves are hitting the right spot that previously impossible or uneconomical ideas now pencil out.

Understanding and betting on exponential technology curves is the surest way for entrepreneurs and investors to see the future. With each doubling in performance or halving in price, ideas that lived only in the realm of sci-fi become possible in reality.

We just invested in a company whose “Why Now?” is that they’re building at the intersection of two powerful curves that are about to hit the right point. They’re working on a holy grail idea that others have tried and failed to build, because they tried a little too early on the curves.

This logic is why many of the best startup ideas can be found in previously overhyped industries. Ideas that were once too early eventually become well-timed.

One of my favorite examples is Casey Handmer’s Terraform Industries, which is using solar to pull CO2 from the air and turn it into natural gas. The company is an explicit bet on solar’s continued price decline, something that even the experts continue to underestimate by overthinking it, and an effort to bend the curve even faster by creating more demand.

New companies enabled by new spots on the curve, in turn, create more demand, which sends capitalist pheromones throughout the supply chain that entice people, in their own self-interest, to make the breakthroughs and iterations that keep the curves going. That makes existing companies’ unit economics better, and unlocks newer, wilder ideas.

On the flip side, curves explain why it’s been so hard to create successful startups that don’t sit at the edge of the curve. As Pace Capital’s Chris Paik explained on Invest Like the Best, “One thing that I think is underexplored is the impact of data transfer speeds as actual why now reasons for companies existing. Let's look at mobile social networks and the order that they were founded in; Twitter 2006, Instagram 2010, Snapchat 2011, maybe most recently TikTok named Musical.ly in 2014.”

He argued that the most successful mobile social networks are the ones that were born right when they could take advantage of a new capability unlocked by advances in mobile bandwidth: text, images, short clips, full videos. In other words, successful social networks were built right at the leading edge of Nielsen’s Law, which states that a high-end user’s connection speed grows by 50% each year.
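To get a feel for what 50% a year compounds to, here is a minimal back-of-the-envelope sketch. It assumes Nielsen’s Law holds exactly and applies to every year in Paik’s timeline, which is a simplification (he was talking about mobile bandwidth, and real-world speeds are lumpier). The function names and the 2006 baseline of 1.0 are hypothetical, chosen only for illustration.

```python
import math


def projected_speed(base_speed: float, years: int, annual_growth: float = 0.5) -> float:
    """Compound a starting connection speed forward by `years` at `annual_growth`."""
    return base_speed * (1 + annual_growth) ** years


def doubling_time(annual_growth: float = 0.5) -> float:
    """Years needed for speed to double at a constant annual growth rate."""
    return math.log(2) / math.log(1 + annual_growth)


if __name__ == "__main__":
    # Founding years from Paik's quote; the 2006 baseline of 1.0 is an
    # assumed, unitless starting point, not a measured speed.
    baseline_year = 2006
    for year in (2006, 2010, 2011, 2014):
        multiple = projected_speed(1.0, year - baseline_year)
        print(f"{year}: {multiple:.1f}x the {baseline_year} baseline")
    print(f"Doubling time: {doubling_time():.2f} years")
```

Under those assumptions, bandwidth doubles roughly every 1.7 years, and the eight years between Twitter and Musical.ly work out to about a 25x increase: enough to go from text to full video.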

The flip side was true, too. Paik made the case that Instagram couldn’t have been successful if it had come after TikTok, and that companies like BeReal and Poparazzi struggled because they are “not strictly more bandwidth consumptive than TikTok.”

Getting curve timing right isn’t the only factor that determines whether a startup will be successful. There are companies that get the timing just right, but fail for a number of reasons: they’re not the right team, they have the wrong strategy, others build against the same curve they do but better, their product sucks, whatever. There are companies that fail because they think they’re building at the right spot on the curve, but are actually too early. And there are companies that succeed despite not building at the leading edge of the curve.

But building and investing in technology startups without at least understanding and appreciating exponential technology curves will lead to more heartache and write-downs than is necessary.

That said, the crazy ones who ignore the curves are important, too. Jumping in too early and overhyping a technology before it’s technically and economically rational to do so is one of the many things that keep curves going against the odds. That internet bandwidth curve might look a lot flatter if telecom companies didn’t get too excited and overbuild capacity during the Dot Com Bubble.

It didn’t work out for them, but it worked out for humanity. Even the failures push us forward.

How beautiful is that?

Thanks to Dan for editing!

That’s all for today! We’ll be back in your inbox on Friday with the Weekly Dose before taking next week’s essay off for Labor Day Weekend.

Thanks for reading,

Packy

Yes, but... technology, no matter how advanced, is a tool in the hands of humans. Human behaviors are governed by social norms and belief systems. While our technologies grew exponentially, our social adaptations are still rooted in survival, our morals still lack ethical clarity, and our collective beliefs are so outdated I can't help but think of this situation as "the monkey with a blaster" scenario. Look at our political and economic systems! Who's controlling the tech, its development and application? We already have everything to make life on earth a paradise, but we don't because of the above.

FINALLY. Someone (and only you could have done this) has beautifully explained (with examples!!!) the type of awe I feel on a daily basis when I go "look at those machines." Yes. Among the doomer ideas that plague modern society, this piece stands out as the antidote. THIS is the techno-optimism I'm subscribed for, and THIS is what I hope you never stop writing about! Keep up the epic work, Packy (and Dan)!