Welcome to the 408 newly Not Boring people who have joined us since last week! If you haven’t subscribed, join 216,703 smart, curious folks by subscribing here:

The sourcing tool for data-driven VCs

Harmonic AI is the startup discovery tool trusted by VCs and sales teams in search of breakout companies. It’s like if Crunchbase or CB Insights were built today and without a bunch of punitive paywalls. Accel, YC, Brex and hundreds more use Harmonic to:

Discover new startups in any sector, geography, or stage including stealth.

Track companies’ performance with insights on fundraising, hiring, web traffic, and more.

Monitor their networks for the next generation of founders.

Whether you're an investor or GTM leader, Harmonic is just one of those high-ROI no-brainers to have in your stack.

Hi friends 👋 ,

Happy Tuesday! Hope you all had a great Thanksgiving (or enjoyed the peace and quiet while we Americans were in turkey comas).

Apologies that this is a little late. Once again, the newsletter gods dropped a perfect example of my point in my lap at the last minute, and I’ve been up since 5:30 trying to incorporate it.

We live in a time of extreme narratives. It’s easy to get caught up and worked up when you take the extremes in isolation. Don’t. They’re part of a bigger game, and once you see it, the world makes a lot more sense.

Let’s get to it.

Narrative Tug-of-War

One of the biggest changes to how I see the world over the past year or so is viewing ideological debates as games of narrative tug-of-war.

For every narrative, there is an equal and opposite narrative. It’s practically predetermined, cultural physics.

One side pulls hard to its extreme, and the other pulls back to its own.

AI is going to kill us all ←→ AI is going to save the world.

What starts as a minor disagreement gets amplified into completely opposing worldviews. What starts as a nuanced conversation gets boiled down to catchphrases. Those who start as your opponents become your enemies.

It’s easy to get worked up if you focus on the extremes, on the teams tugging the rope on each side. It’s certainly easy to nitpick everything they say and point out all of the things they missed or left out.

Don’t. Focus on the knot in the middle.

That knot, moving back and forth over the center line as each team tries to pull it further to their own side, is the important thing to watch. That’s the emergent synthesis of the ideas, and where they translate into policy and action.

There’s this concept called the Overton Window: the range of policies or ideas that are politically acceptable at any given time.

Since Joseph Overton came up with the idea in the mid-1990s, the concept has expanded beyond government policy. Now, it’s used to describe how ideas enter the mainstream conversation where they influence public opinion, societal norms, and institutional practices.

The Overton Window is the knot in the narrative tug-of-war. The teams pulling on either side don’t actually expect that everyone will agree with and adopt their ideas; they just need to pull hard enough that the Overton Window shifts in their direction.

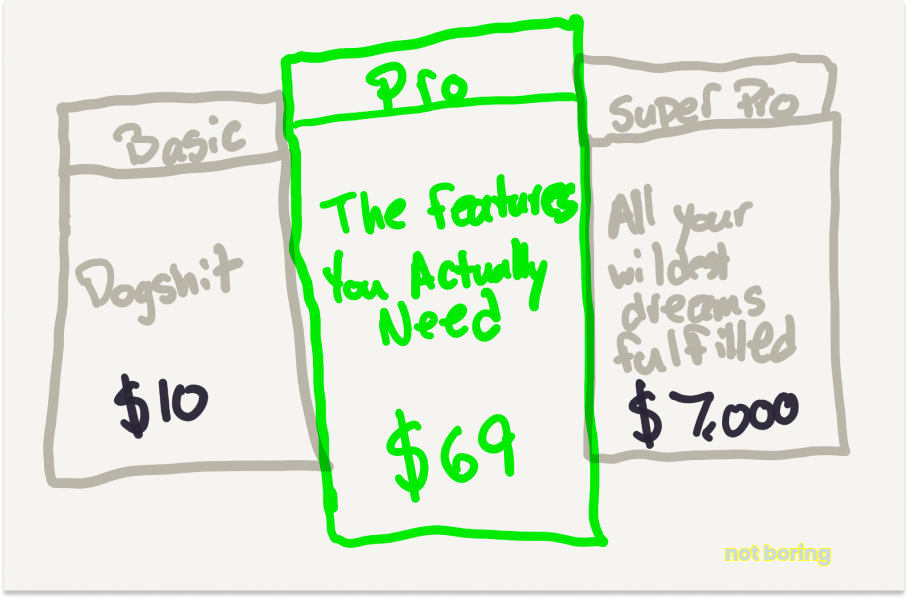

Another way to think about it is like price anchoring, when a company offers multiple price tiers knowing that you’ll land on the one in the middle and pay more for it than you would have without seeing how little you get for the lower price or how much you’d have to pay to get all of the features.

No one expects you to pay $7,000 for the Super Pro tier (although they’d be happy if you did). They just know that by showing it to you, it will make paying $69 for the Pro tier more palatable.

The same thing happens with narratives, but instead of one company carefully setting prices to maximize the likelihood that you buy the Pro tier, independent and opposed teams, often made up of people who’ve never met, loosely coordinated through group chats and memes, somehow figure out how to pull hard enough that they move the knot back to what they view as an acceptable place. It’s a kind of cultural magic when you think about it.

There are a lot of examples I could use to illustrate the idea, many of which could get me in trouble, so I’ll stick to what I know: tech. Specifically, degrowth vs. growth, or EA vs. e/acc.

EA vs. e/acc

One of the biggest debates in my corner of Twitter, which burst out into the world with this month’s OpenAI drama, is Effective Altruism (EA) vs. Effective Accelerationism (e/acc).

It’s the latest manifestation of an age-old struggle between those who believe we should grow, and those who don’t, and the perfect case study through which to explore the narrative tug-of-war.

If you look at either side in isolation, both views seem extreme.

EA (which I’m using as a shorthand for the AI-risk team) believes that there is a very good chance that AI is going to kill all of us. Given that there will be trillions of humans in the coming millennia, even if there’s a 1% chance AI will kill us all, preventing that from happening will save tens or hundreds of billions of expected lives. We need to stop AI development before we get to AGI, whatever the cost.

As that team’s captain, Eliezer Yudkowsky, wrote in Time:

Shut down all the large GPU clusters (the large computer farms where the most powerful AIs are refined). Shut down all the large training runs. Put a ceiling on how much computing power anyone is allowed to use in training an AI system, and move it downward over the coming years to compensate for more efficient training algorithms. No exceptions for governments and militaries. Make immediate multinational agreements to prevent the prohibited activities from moving elsewhere. Track all GPUs sold. If intelligence says that a country outside the agreement is building a GPU cluster, be less scared of a shooting conflict between nations than of the moratorium being violated; be willing to destroy a rogue datacenter by airstrike.

The idea that we should bomb datacenters to prevent the development of AI, taken in a vacuum, is absurd, as many of AI’s supporters were quick to point out.

e/acc (which I’m using as a shorthand for the pro-AI team) believes that AI won’t kill us all and that we should do whatever we can to accelerate it. They believe that technology is good, capitalism is good, and that the combination of the two, the techno-capital machine, is the “engine of perpetual material creation, growth, and abundance.” We need to protect the techno-capital machine at all costs.

Marc Andreessen, who rocks “e/acc” in his Twitter bio, recently wrote The Techno-Optimist Manifesto, in which he makes the case for essentially unchecked technological progress. One section in particular drew the ire of AI’s opponents:

We have enemies.

Our enemies are not bad people – but rather bad ideas.

Our present society has been subjected to a mass demoralization campaign for six decades – against technology and against life – under varying names like “existential risk”, “sustainability”, “ESG”, “Sustainable Development Goals”, “social responsibility”, “stakeholder capitalism”, “Precautionary Principle”, “trust and safety”, “tech ethics”, “risk management”, “de-growth”, “the limits of growth”.

This demoralization campaign is based on bad ideas of the past – zombie ideas, many derived from Communism, disastrous then and now – that have refused to die.

The idea that things most people view as good – like sustainability, ethics, and risk management – are enemies, taken in a vacuum, seems absurd, as many journalists and bloggers were quick to point out.

What critics of both pieces missed is that neither argument should be taken in a vacuum. Nuance isn’t the point of any one specific argument. You pull the edges hard so that nuance can emerge in the middle.

While there are people on both teams who support their side’s most radical views – a complete AI shutdown on one side, unchecked techno-capital growth on the other – what’s really happening is a game of narrative tug-of-war in which the knot is regulation.

EA would like to see AI regulated, and would like to be the ones who write the regulation. e/acc would like to see AI remain open and not controlled by any one group, be it a government or a company.

One side tugs by warning that AI Will Kill Us All in order to scare the public and the government into hasty regulation; the other side tugs back by arguing that AI Will Save the World to stave off regulation for long enough that people can experience its benefits firsthand.

Personally, and unsurprisingly, I’m on the side of the techno-optimists. That doesn’t mean that I believe that technology is a panacea, or that there aren’t real concerns that need to be addressed.

It means that I believe that growth is better than stagnation, that problems have solutions, that history shows that both technological progress and capitalism have improved humans’ standard of living, and that bad regulation is a bigger risk than no regulation.

While the world shifts based on narrative tug-of-wars, there is also truth, or at least fact patterns. Doomers – from Malthus to Ehrlich – continue to be proven wrong, but fear sells, and as a result, the mainstream narrative continues to lean anti-tech. The fear is that restrictive regulation will be put in place before the truth can emerge.

Because the thing about this game of narrative tug-of-war is that it’s not a fair one.

The anti-growth side needs only to pull hard and long enough to get regulation enacted. Once it’s in place, it’s hard to overturn; typically, it ratchets up. Nuclear energy is a clear example.

If they can pull the knot over the regulation line, they win, game over.

The pro-growth side has to keep pulling for long enough for the truth to emerge in spite of all the messiness that comes with any new technology, for entrepreneurs to build products that prove out the promise, and for creative humans to devise solutions that address concerns without neutering progress.

They need to keep the tug-of-war going long enough for solutions to emerge in the middle.

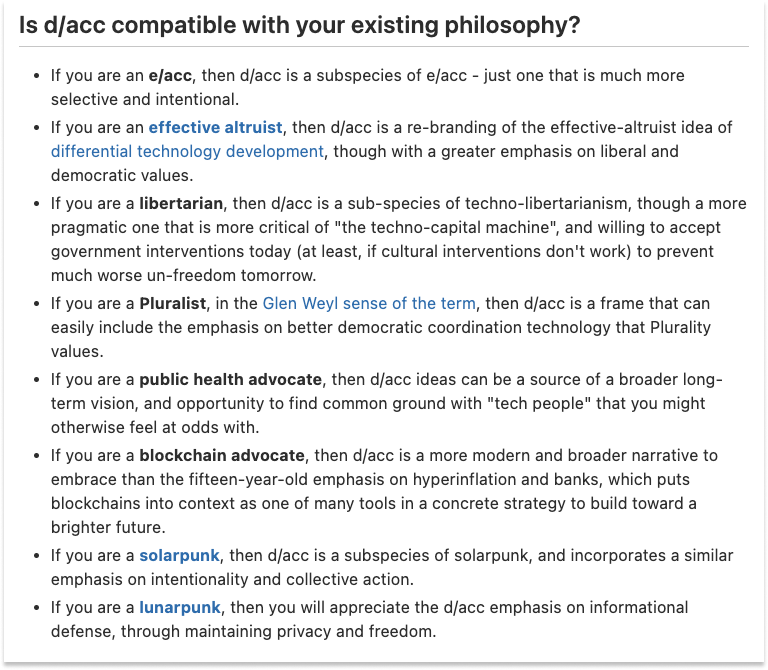

Yesterday, Ethereum co-founder Vitalik Buterin wrote a piece called My techno-optimism in which he proposed one such solution: d/acc.

The “d,” he wrote, “can stand for many things; particularly, defense, decentralization, democracy and differential.” It means using technology to develop AI in a way that protects against potential pitfalls and prioritizes human flourishing.

On one side, the AI safety movement pulls with the message: “you should just stop.”

On the other, e/acc says, “you’re already a hero just the way you are.”

Vitalik proposes d/acc as a third, middle way:

A d/acc message, one that says "you should build, and build profitable things, but be much more selective and intentional in making sure you are building things that help you and humanity thrive", may be a winner.

It’s a synthesis, one he argues can appeal to people whatever their philosophy (as long as the philosophy isn’t “regulate the technology to smithereens”).

Without EA and e/acc pulling on both extremes, there may not have been room in the middle for Vitalik’s d/acc. The extremes, lacking nuance themselves, create the space for nuance to emerge in the middle.

If EA wins, and regulation halts progress or concentrates it into the hands of a few companies, that room no longer exists. If the goal is to regulate, there’s no room for a solution that doesn’t involve regulation.

But if the goal is human flourishing, there’s plenty of room for solutions. Keeping that room open is the point.

Despite the fact that Vitalik explicitly disagrees with pieces of e/acc, both Marc Andreessen and e/acc’s pseudonymous co-founder Beff Jezos shared Vitalik’s post. That’s a hint that they care less about their own solution winning than about a good solution emerging.

Whether d/acc is the answer or not, it captures the point of tugging on the extremes beautifully. Only once e/acc set the outer boundary could a solution that involves merging humans and AI through Neuralinks be viewed as a sensible, moderate take. Ray Kurzweil made that point a couple decades ago and has the arrows to prove it.

In this and other narrative tug-of-wars, the extremes serve a purpose, but they are not the purpose. For every EA, there is an equal and opposite e/acc. As long as the game continues, solutions can emerge from that tension.

Don’t focus on the tuggers, focus on the knot.

Thanks to Dan for editing!

That’s all for today. We’ll be back in your inbox with the Weekly Dose on Friday!

Thanks for reading,

Packy

This article is a good reminder to avoid extremism, and an explanation of the concept of Overton windows. However, I have two critiques of it.

The first critique is that it's a bad summary of the AI x-risk / EA position. The first people who cared about AI x-risk were radical transhumanists who wanted aligned superintelligent AGI to create an unimaginably prosperous world. For example, Bostrom's "Letter from Utopia" ( https://nickbostrom.com/utopia ) describes starting with abolishing death and massively enhancing human intelligence. In my experience, the culture in AI x-risk circles remains one where space colonisation, great personal liberty, and near-unlimited prosperity for everyone are taken for granted when talking about what the future should be like. What's changed since the early days is that some people have gotten very pessimistic about the difficulty of the technical AI alignment problem. (My own stance is that as a civilisation, we have not made much of an effort on technical alignment yet, so it's too early to put massive odds against a solution.)

My second critique is that this article seems like an example of one of the main cultural forces standing in the way of growth.

The object-level question is: under what conditions will AI cause human extinction? Experts disagree on this but very few serious people think that this is an obvious question either way. There is a lot of technical work being done to find the answer.

Now what the article does, in effect, is say this: forget the object-level, technical question. The real question is about narratives (i.e. politics and power). And the solution? There's no mention of technical work or science, or even any tricky object-level question (it's assumed everything is political) -- the message is to look at the positions and assume that the middle position is right. If all discourse consisted of political games along a 1d axis, then yes, eventually there's probably a compromise in the middle (or no solution at all). But in this case, the key updates on what we should do are going to come from future breakthroughs in AI, which in turn depend on the brute facts of what is technically feasible. Everyone's (hopefully) on one team when it comes to thinking that apocalypse is bad. And if I had to draw a line between two sides, the most salient one I see is between those trying to use AI as a case study for their own political battles, and those who are trying to build beneficial tech and carefully wring bits of information out of reality so we know how to do so.

I think a big reason why our civilisation is under-performing on growth is that politicking and narrative-spinning are taking over discourse, which in turn drives policy and culture. Twitter (and to a lesser but still relevant extent, Substack) selects hard for virality, and narratives are more viral than tech or science. Sometimes an issue should not be shoe-horned into a political narrative, and sometimes you have to stop spinning narratives and engage with the actual tech and the actual science.

For another alternative (or synthesis, some might say), check out this piece on Technorealism: https://boydinstitute.org/p/the-technorealist-alternative